Safer Internet Day

It’s obscene. Every 4 minutes, analysts working for the Internet Watch Foundation (IWF) in Cambridge remove an online photo of a child suffering sexual abuse. Of those children, 1% are aged 0 to 2, and 48% of the images feature children aged 7 to 10.

The IWF will be 25 years old this year, and after a quarter of a century its work is needed more than ever. It works with law enforcement and with the commercial and voluntary sectors. Its job is to take down child sexual abuse images and videos from the web and to pass vital information to the police to help them rescue victims and stop abusers. Much of its information comes via its hotline, where members of the public can report these images while remaining safely anonymous.

Zero tolerance

In the world of online safety, the IWF is unusual. In most countries the web is a free-for-all and there are relatively few barriers to publishing violent images. Some companies are loath to intervene and take down illegal or distressing content because they fear a loss of advertising revenue; in other countries, complainants have to get a court order to remove this material.

Britain is different. The IWF has a Memorandum of Understanding which means it is empowered to take immediate action. Typically, it takes down content within two hours of its being reported.

We also have a government that is willing to promote and enforce internet regulation. The proposed Online Harms Bill would require platforms to abide by a code of conduct, currently in interim form, that sets out their responsibilities towards children and issues strict guidelines on removing illegal content such as child sexual abuse material, terrorist material and media that promotes suicide. While some companies have done much to safeguard their users and have led the way with technical innovations to block content, compliance has been largely voluntary until now.

Dangers of lockdown

‘Lockdown has led to a perfect storm,’ says Susie Hargreaves, CEO of the IWF, ‘with children at home with access to the internet and perpetrators at home with more free time.’

During a one-month period at the start of the first lockdown in 2020, three IWF member companies blocked and filtered at least 8.8 million attempts by UK internet users to access videos and images of children suffering sexual abuse. The IWF received 44,809 reports from members of the public between March 23 and July 9, 2020, a 50% increase over the same period in 2019.

These are big numbers, but in a Covid world we are all numbed by statistics. Let’s have a story instead, courtesy of Sky News: ‘Olivia, who was raped and sexually tortured as a child. Her abuse began at the age of three and she was rescued from her abuser in 2013 at the age of eight, according to the IWF.’

Although the abuser, who was known to the child, was jailed, the images persisted online. The IWF told Sky News: ‘…we counted the number of times we saw Olivia’s image online during a three-month period. We saw her at least 347 times. On average, that’s five times each and every working day. In three out of five times she was being raped, or sexually tortured. Some of her images were found on commercial sites. This means that in these cases, the site operator was profiting from this child’s abuse.’

Why can’t we stop the abuse?

Partly this is because of the technology. Partly it is supply and demand. No matter what restrictions are put in place, criminals will always find ways to access new secret and secure areas. Tor is a case in point. Originally developed to protect US intelligence communications, it was later released as open-source software for people concerned about data privacy. Tor has many ‘legitimate’ users, including investigative journalists and researchers, as well as criminals. It offers a free private browser, available for Windows and other operating systems, that protects users from online surveillance and tracking.

Every time developers come up with a solution to filter out child sexual abuse content, another developer comes up with a way to circumvent it.

However, technology can also help. While no single technology solution works across all platforms, companies are sharing third-party tools that can identify where images are held, making them available to smaller companies and start-ups that could not otherwise afford to fund such developments.

Working with other countries

In June 1996, the first internet hotline dealing with child sexual abuse material was set up in the Netherlands by people in the internet industry with the support of the police. This was quickly followed by initiatives in Norway, Belgium and the UK. INHOPE is a network of 47 hotlines around the world, covering all EU member states, Russia, South Africa, North and South America, Asia, Australia and New Zealand.

Some argue that once a country makes it clear it is operating an environment hostile to child sexual abuse material, it just drives the material underground. The internet has no national borders, and once this material is outlawed in one part of the globe it springs up elsewhere. According to the latest statistics, published at the beginning of the last lockdown, less than half a percent of child sexual abuse material is hosted on British servers, yet some 300,000 people in the UK pose a sexual threat to children on and offline.

However, if organisations form coalitions and work with other governments, national and international law enforcement agencies and major companies, there will be nowhere for perpetrators to go.

Some good news

Wealthy countries such as the USA and Canada have both the infrastructure and the money to tackle some of these issues. In the US, the National Center for Missing & Exploited Children was founded in 1984, following a series of high-profile child abductions. It operates the CyberTipline, which was established by Congress to process reports of child sexual exploitation. Anyone can make a report to the CyberTipline, but some digital service providers must file a report if they become aware of child sexual abuse material on their systems. Many providers now actively seek out and report such material, and in 2018 the CyberTipline processed 18.4 million reports.

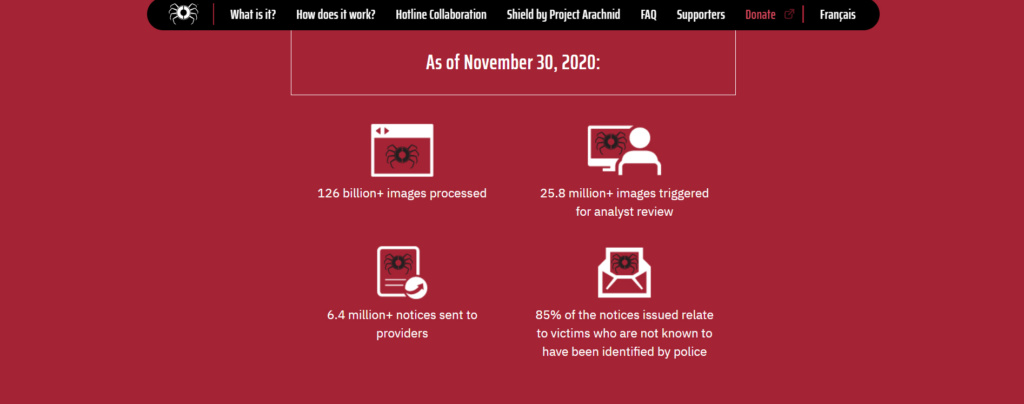

Project Arachnid is run by the Canadian Centre for Child Protection. It is an automated web crawler and platform that helps reduce the online availability of child sexual abuse material around the world. It can process thousands of images a second. It uses lists of digital fingerprints (hashes) to search for known illegal images and sends removal notices to the websites that host them, to ensure all instances of these images are removed from the open web.

Firms, including social media companies, will be able to plug Project Arachnid into their systems to identify and flag for removal any indecent imagery, even in ‘closed environments’ that only users and the company can see. Soon Project Arachnid will be able to include hashes supplied by the Canadian CyberTipline and the US National Center for Missing and Exploited Children. This means the number of images Project Arachnid can identify will be in the hundreds of thousands.
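The idea of matching content against lists of digital fingerprints can be illustrated in a few lines of code. This is a generic sketch, not Project Arachnid’s actual implementation, which this article does not describe. It assumes exact SHA-256 digests; real systems often also use perceptual hashes, which can match an image that has been resized or re-encoded, something exact hashing cannot do. The function names, the example URLs and the placeholder byte strings are all hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Exact-match fingerprint: the hex SHA-256 digest of the raw bytes."""
    return hashlib.sha256(data).hexdigest()


def merge_hash_lists(*sources: set[str]) -> set[str]:
    """Combine hash lists from several (hypothetical) suppliers into one set,
    normalising to lower-case hex so duplicate entries collapse."""
    merged: set[str] = set()
    for source in sources:
        merged.update(h.lower() for h in source)
    return merged


def flag_known_items(items: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the URLs (keys) whose content fingerprint is on the known list.
    Set membership is an O(1) average-case lookup, which is what lets a
    crawler check large volumes of content quickly."""
    return [url for url, data in items.items() if fingerprint(data) in known_hashes]


if __name__ == "__main__":
    # Placeholder byte strings stand in for downloaded files.
    list_a = {fingerprint(b"known-item-1")}
    list_b = {fingerprint(b"known-item-2").upper()}  # a supplier using upper-case hex
    known = merge_hash_lists(list_a, list_b)
    crawled = {
        "https://example.com/a": b"known-item-1",
        "https://example.com/b": b"unrelated",
        "https://example.com/c": b"known-item-2",
    }
    print(flag_known_items(crawled, known))
    # prints ['https://example.com/a', 'https://example.com/c']
```

Merging lists from several suppliers into one set, as in the sketch, is one simple way that adding hashes from other organisations increases the number of items a single check can recognise.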

Shout out to hackers

One of the most commonly cited solutions is to recruit more hackers to defend us: so-called ‘white hat’ staff who have the combination of skills, experience and creativity to devise unexpected and original solutions.

The IWF hosts hackathons where it poses genuine challenges to groups of people, meeting virtually or in person, who excel in their chosen technical field and want to give something back. These events allow some of the best minds to collaborate on some of the toughest challenges and help the IWF remain one step ahead of offenders.

What can we do?

People shut down when faced with the issue of sexual abuse and child exploitation, but it is so prevalent in society that we need to accept that we have a major problem. Most of us feel powerless and overwhelmed by its scale, but we must not lose sight of the remarkable successes that agencies around the world are achieving on limited resources. A first step would be to make others aware of their work, to petition for that work to be exempt from cuts and to press for the speedy enactment of laws that protect children.

In the last few years, we have seen the extraordinary impact of the #MeToo and #BLM movements, which have kickstarted conversations about sexual harassment and racial intolerance. Child sexual abuse material does not have to be an inevitable result of the internet. Hopefully attitudes will change, and in the future people will look back and ask, ‘Why did they let that happen?’

Advice from the IWF:

- Do report images and videos of child sexual abuse to the IWF so that they can be removed. Reports to the IWF can be made anonymously.

- Do provide the exact URL where child sexual abuse images are located.

- Don’t report other harmful content – you can find details of other agencies to report to on the IWF’s website.

- Do report to the police if you are concerned about a child’s welfare.

- Do report only once for each web address – or URL. Repeat reporting of the same URL isn’t needed and wastes analysts’ time.

